Almost 43% of the world's population is bilingual, meaning they can speak two languages fluently! There is a common misconception that bilingualism causes confusion or language delays. However, recent research shows that mixing two languages is good for the brain.

People who speak more than one language often have to switch between them, which means they must suppress one language while speaking the other. For instance, when looking at a dog, a monolingual English speaker will simply say ‘dog’. But a bilingual has two alternatives to choose from: ‘sag’ (Persian for ‘dog’) and ‘dog’. Bilinguals are therefore constantly making a choice that monolinguals never have to make. This mental exercise can improve the brain's executive function: the set of mental processes that helps us focus, remember instructions, and multitask. Research has shown that bilinguals perform better than monolinguals in situations that demand strong executive function abilities. Moreover, bilingualism has also been linked to a delayed onset of Alzheimer's symptoms in older adults.

In my research, I investigated whether bilinguals process sentences differently from monolinguals as they hear them in ongoing speech. For instance, consider this sentence:

- The boy that the man pushes has a red shirt

In this sentence, it is harder to understand who did what to whom than in this one:

- The man that pushes the boy has a red shirt

The first sentence is an object relative construction, and listeners can experience confusion, or mental interference, when deciding whether the man pushed the boy or the boy pushed the man! Who is the subject and who is the object? This interference effect is a useful metric for testing whether bilinguals and monolinguals differ in how quickly they work out the meaning of a sentence.

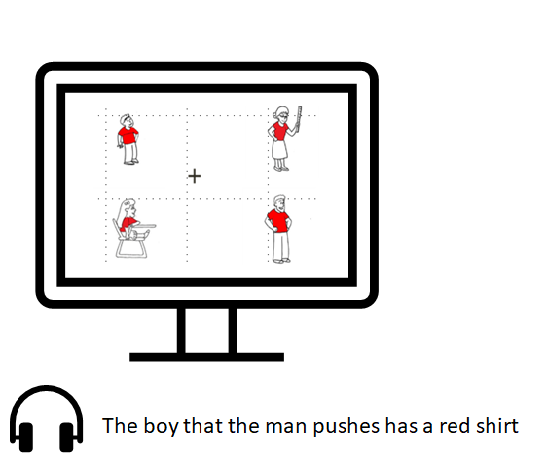

To explore this question, you need a tool that can track mental processes as they unfold in real time. Several data acquisition methods can do this. For instance, electroencephalography (EEG) measures the electrical activity of the brain, while eye-tracking measures eye movements and eye position; in other words, it shows where the observer's attention is focused. Using eye-tracking, I could therefore measure what people were looking at when they heard the verb, the point in the sentence where the interference effect occurs.

I presented monolinguals and bilinguals with a series of spoken sentences (easy and complex), together with a visual array that depicted the characters in each sentence. This paradigm allows us to track what listeners are looking at as they hear the sentence in real time. I found that when listening to sentences such as “The man that the boy pushes has a red shirt”, listeners looked at the man upon hearing “the man”, then at the boy upon hearing “the boy”, and when they heard “pushes” they looked back and forth between the man and the boy. This suggests that executive function shifts attention between the two characters until it resolves the interference over who did what to whom.

My findings showed that bilinguals resolved this interference earlier than monolinguals. Although this research does not indicate that bilinguals are smarter than monolinguals, it suggests that bilinguals manage interference during speech processing more efficiently, due to their everyday practice of juggling two active languages in their minds.